Report: Tech Vendors: Product and Relationship Satisfaction, 2017

January 17, 2017

We just published a Temkin Group data snapshot, Tech Vendors: Product and Relationship Satisfaction of IT Clients, 2017.

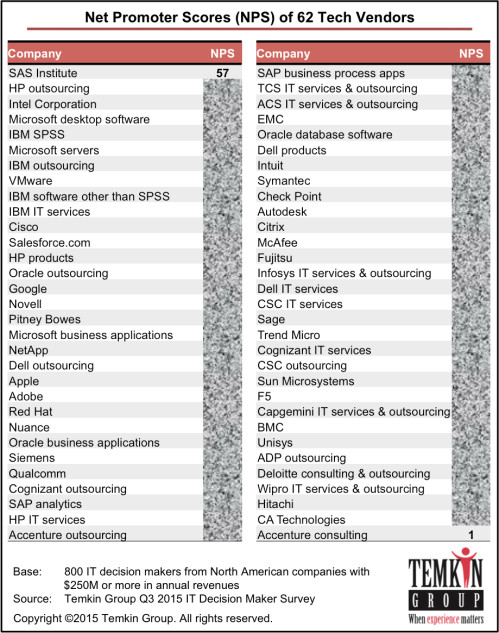

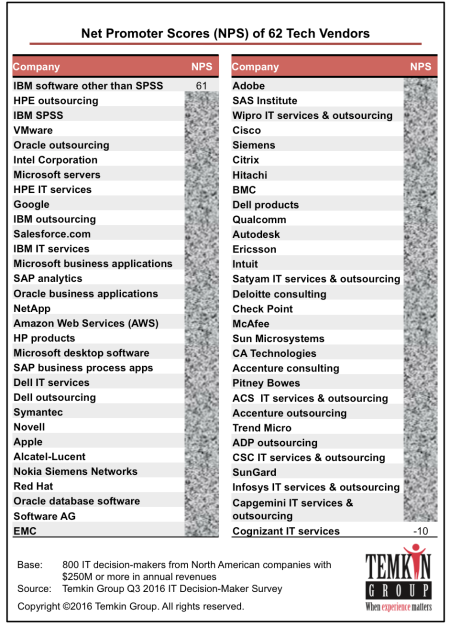

During Q3 of 2016, we surveyed 800 IT decision-makers from companies with at least $250 million in annual revenues, asking them to rate both the products of and their relationships with 62 different tech vendors. HPE outsourcing, Google, and IBM SPSS earned the top overall scores, while Trend Micro, Infosys, and SunGard received the lowest overall scores. To determine each vendor's product rating, we evaluated tech vendors across four product/service criteria: features, quality, flexibility, and ease of use. We calculated each vendor's relationship rating using four other criteria: technical support, support of the account team, cost of ownership, and innovation of the company. We also looked at how the average product and relationship scores of tech vendors have changed over the previous three years.

This research includes a report (.pdf) and a dataset (Excel). The dataset contains the details of product/service and relationship satisfaction for the 62 tech vendors, as well as for several tech vendors with sample sizes too small to be included in the published report.

Download report for $495

(includes Excel spreadsheet with data)

Here’s a link to last year’s study.

The research examines eight areas of satisfaction: four that deal with products and services and four that examine relationships. Tech vendors earned the highest average satisfaction level for product features (64%) and the lowest for total cost of ownership (57%).

As you can see in the chart below, overall product/service and relationship satisfaction ranges from a high of 76% for HPE outsourcing to a low of 42% for Trend Micro.

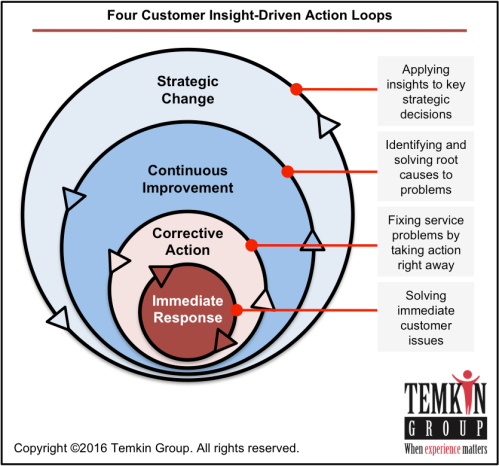
