Tracking Your Top 10 Dissatisfaction Drivers

April 29, 2009

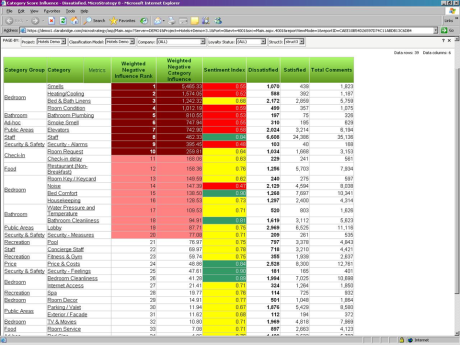

One trend in voice-of-the-customer programs is the growing analysis of unstructured data. To demonstrate the power of this information, I asked Clarabridge CEO Sid Banerjee to share one of his firm’s standard reports, the “Negative Influence Report.” Companies can use this report to track and raise awareness of the top drivers of dissatisfaction.

Here’s how Banerjee described this report:

During the feedback collection process, it’s not uncommon for a company to collect two critical pieces of information:

- the customers’ overall assessment of the experience – typically a 1-5 score, where 1 = bad and 5 = good.

- the customers’ open-ended feedback on the experience – typically a few sentences to a few paragraphs of unstructured text.

The Clarabridge Negative Influence report correlates the negative experiences described in the open-ended feedback (grouped into specific categories of experience, each with its accompanying negative sentiment) with low scores. It also weights the ranking of the most negative influences by frequency: how often a specific experience is associated with negative assessments.

The report contains the following fields:

- Total Comments – Count of the total comments for the given category.

- Satisfied – Count of the scores at the 5 out of 5 level.

- Dissatisfied – Count of the scores at the 1 – 3 out of 5 level.

- Sentiment Index – A score derived from the sentiment of the comments in the given category, as processed by Clarabridge’s natural language processing engine. 1 is the highest possible sentiment (positive) and 0 is the lowest (negative).

- Weighted Category Influence* – A weighting of sentiment, dissatisfied volume, and category influence that identifies the most important issues driving negativity, and presumably the top issues on which to focus.

- Weighted Influence Rank – A ranking of the weighted category influence.
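To make the count columns concrete, here is a minimal sketch of how Total Comments, Satisfied, and Dissatisfied could be tallied per category. It assumes each response has already been tagged with categories by some text-mining step; the function name, the data layout, and the sample responses are all hypothetical and are not taken from Clarabridge's product.

```python
from collections import defaultdict


def summarize_by_category(responses):
    """Tally the count columns for each category.

    `responses` is an iterable of (score, categories) pairs, where score is
    the 1-5 overall rating and categories lists the topics found in the
    open-ended comment (assumed to come from an upstream tagging step).
    """
    summary = defaultdict(lambda: {"total": 0, "satisfied": 0, "dissatisfied": 0})
    for score, categories in responses:
        for category in set(categories):  # count each comment once per category
            row = summary[category]
            row["total"] += 1
            if score == 5:               # Satisfied: 5 out of 5
                row["satisfied"] += 1
            elif 1 <= score <= 3:        # Dissatisfied: 1 to 3 out of 5
                row["dissatisfied"] += 1
    return dict(summary)


# Made-up sample data, for illustration only.
responses = [
    (5, ["Staff"]),
    (2, ["Staff", "Bedroom Smells"]),
    (1, ["Bedroom Smells"]),
    (4, ["Staff"]),
]
summary = summarize_by_category(responses)
```

Note that a score of 4 counts toward the total but falls into neither the Satisfied nor the Dissatisfied bucket under the definitions above.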

*The weighted category influence uses a chi-squared algorithm to provide the ranking. It measures whether a correlation exists between the category and a measurable difference in its breakdown of satisfied versus dissatisfied customers compared with the rest of the categories, and it is also weighted by the number of occurrences of the category. The higher the category influence score, the more likely it is that the category is a factor driving people to dissatisfaction. This score does not indicate which direction the category will pull people towards, just whether or not it seems to have a measurable impact on people’s choice of dissatisfaction.
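The exact Clarabridge formula is not given here, so the following is only a sketch of the general idea: a plain Pearson chi-squared statistic on a 2x2 table (this category versus all other responses, dissatisfied versus satisfied). The function names, category labels, and counts are assumptions for illustration, and the sentiment weighting mentioned above is not modeled.

```python
def chi_squared_2x2(a, b, c, d):
    """Pearson chi-squared statistic for the 2x2 table

                      dissatisfied  satisfied
        category            a           b
        all others          c           d
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        return 0.0  # statistic is undefined for an empty row or column
    return n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)


def rank_categories(counts, total_dissatisfied, total_satisfied):
    """Rank categories by chi-squared score, highest first.

    `counts` maps category -> (dissatisfied, satisfied) counts among the
    responses that mention that category.
    """
    scores = {
        name: chi_squared_2x2(dis, sat, total_dissatisfied - dis, total_satisfied - sat)
        for name, (dis, sat) in counts.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Made-up counts: 50 dissatisfied and 50 satisfied responses overall.
counts = {"Category A": (30, 10), "Category B": (5, 5)}
ranking = rank_categories(counts, 50, 50)
```

Because the difference `a * d - b * c` is squared, the statistic is the same whether a category skews toward satisfied or dissatisfied customers, which matches the note that the score does not indicate direction. The statistic also grows with sample size for a given skew, so frequently mentioned categories tend to rank higher.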

The bottom line: Keep attacking your customers’ top problems.

P.S. Clarabridge is not the only vendor that provides this type of analysis.


A similar analysis of customer contact registration can provide additional insight on exactly where the problem is.

Most CRM and contact registration systems let agents and customer service representatives add text comments after finishing a contact. Analysing this information specifically for registered contacts that are multiples (same customer, other contact reason) or repeat traffic (same customer, same contact reason) can give you good feedback that confirms the root cause of customer dissatisfaction.

Wim Rampen
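As a rough illustration of the distinction drawn in this comment, the sketch below labels each contact as a first contact, a repeat (same customer, same reason), or a multiple (same customer, other reason). The record layout, field names, and sample contacts are hypothetical; a real CRM export would need its own mapping.

```python
from collections import defaultdict


def flag_contacts(contacts):
    """Label contacts as 'first', 'repeat', or 'multiple'.

    `contacts` is a chronologically ordered list of
    (customer_id, reason, agent_comment) tuples.
    """
    seen = defaultdict(set)  # customer_id -> contact reasons seen so far
    labels = []
    for customer_id, reason, _agent_comment in contacts:
        reasons = seen[customer_id]
        if reason in reasons:
            labels.append("repeat")    # same customer, same contact reason
        elif reasons:
            labels.append("multiple")  # same customer, other contact reason
        else:
            labels.append("first")
        reasons.add(reason)
    return labels


# Made-up contacts, for illustration only.
contacts = [
    ("c1", "billing", "Invoice unclear"),
    ("c1", "billing", "Still unclear after first call"),
    ("c1", "delivery", "Package late"),
    ("c2", "billing", "Wrong amount"),
]
labels = flag_contacts(contacts)
```

The agent comments attached to the contacts labeled repeat or multiple are the ones to read or text-mine first.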

Good stuff, Bruce. The key for this, like so many inputs, is the organization’s fortitude to take the next actions and drill down on the associated touch points and/or processes to identify where they are breaking down. Then apply the resources: process improvement team, training, near-real-time information, better competitor awareness, etc. We don’t intentionally design processes to promote dissatisfaction, yet in practice they turn out that way. The eventual winners are those who recognize this and do something about it.

Great report. In my experience this type of report is most powerful when you leverage it within the construct of a process improvement methodology such as Six Sigma, Lean Thinking, or the Theory of Constraints. Ultimately, you have the customers’ perspectives on the emotional constraints in your business. This is important because the value of your product or service is dramatically reduced as the emotional engagement of your customer declines.

Hi Bruce – This data is difficult to comment on without understanding the basis for collecting the comments (i.e. what was the customer asked?). For example, was the customer asked to “score” the bedroom smells, or were some customers actually stating they were satisfied because the bedrooms had a pleasant smell or no smell at all? This makes a huge difference to the validity of the data and analysis.

If key driver analysis is really the requirement then how the question is asked is vital. A Net Promoter Score program with the question “why did you score us this way?” is a great way of getting to the key drivers behind promoters and detractors.

FYI, our Customer Intimacy Quadrant (with some assumptions on my part and using only the data presented) suggests the two issues most in need of attention are Bedroom Smells and Staff. I’m happy to discuss this further with you or Sid if you’re interested.

Regards, Neil

Neil – to address your question – typically a customer is asked 2 things:

1) Rate your OVERALL experience on a scale of 1-5 (this provides the basis for “satisfaction”).

2) Describe your experience (open ended question).

When people describe their experiences, they may or may not be describing what caused the positive or negative experience in the free-form response. They will simply be describing their experience.

The text mining platform extracts ALL experiences from the text, and scores the experiences by sentiment, then correlates the experiences to dissatisfied customers. In effect – we’re doing the analysis to find out what negative experiences have the most correlation to dissatisfied customers.

I agree that if you use a strict NPS methodology (which many organizations do use when they’re doing this type of analysis), and the open-ended question asks a customer to explain WHY they provided a negative score, you’re very likely to get a very causative list of issues. But even if the question is open-ended, the beauty of the correlation metric is that it will still show negative experiences that co-occur with customer dissatisfaction. As Bruce stated in the blog: “This [negative influence] score does not indicate which direction this category will pull people towards, just whether or not it seems to have a measurable impact on people’s choice [of] dissatisfaction.”

To James’ point, I absolutely agree: it’s not enough to just do the analysis. To make the analysis lead to improvement, drill down into the issues, identify possible “fixes” to the experience, try them, and monitor the negative influence scores over time once the fixes have been applied, to determine whether they are producing a real change in customer satisfaction. The report should be part of a customer experience process that aims for continuous monitoring and continuous improvement. Change comes from action, not just analysis.
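The monitoring step described here can be sketched as a simple before-and-after comparison of influence scores per category. The function names, the `min_drop` threshold, and the scores below are all hypothetical, and a real program would track more than two periods.

```python
def score_changes(before, after):
    """Return {category: after - before} for categories present in both periods."""
    return {c: after[c] - before[c] for c in before if c in after}


def improved_categories(before, after, min_drop=0.0):
    """Categories whose influence score fell by more than `min_drop`."""
    changes = score_changes(before, after)
    return sorted(c for c, delta in changes.items() if delta < -min_drop)


# Made-up influence scores before and after a fix was rolled out.
before = {"Category A": 16.7, "Category B": 4.0}
after = {"Category A": 9.2, "Category B": 4.5}
improved = improved_categories(before, after)
```

A falling score only suggests the fix is helping; it is worth checking that overall satisfaction scores move in the same direction before declaring success.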

Hi everyone: Even though I’ve been too busy to comment lately, it’s great to see the back and forth on this post. Thanks for commenting.